Elman Mansimov

alignDRAW, 10-Year Anniversary (2015 - 2025)

Overview

"alignDRAW images lack photorealistic polish, but they carry the aura of Niépce’s first photograph: hesitant, imperfect, epochal."

- Darius Himes

alignDRAW Book (2025)

alignDRAW brings together five essays that revisit Elman Mansimov’s 2015 experiment in generating images from text using neural networks. The book presents this early stage of text-to-image AI as both a technical and cultural event, where computation began to participate in image-making. The essays by Lev Manovich, Shana Lopes, Eva Jäger, Mat Dryhurst, and Darius Himes situate Mansimov’s work within longer histories of visual representation, from photography to conceptual art, and examine how alignDRAW blurs the boundaries between research and artistic practice. Code experiments like Mansimov’s are consistent with the logic and aims of contemporary art: process over product, methodology made visible, participation invited, and new aesthetic territories explored. If contemporary art institutions claim to care about where genuinely new forms of seeing and making emerge, this project argues, they must broaden their attention to include these forms of computational creation.

The book will be available for delivery in early 2026 for Ξ0.02015 + shipping.

Interview with Darius Himes

This interview brings together Elman Mansimov, creator of alignDRAW, and Darius Himes, curator and International Head of Photographs at Christie’s, to discuss the tenth anniversary of the project that first demonstrated how text could generate images through neural networks. The discussion traces the project’s journey from a 2015 research paper to its later recognition as an artwork. Through reflections on authorship, intention, and the shifting boundaries between science and aesthetics, Mansimov and Himes explore how alignDRAW fits within the long arc of image-making, from the invention of photography to today’s generative systems.

Five New Essays

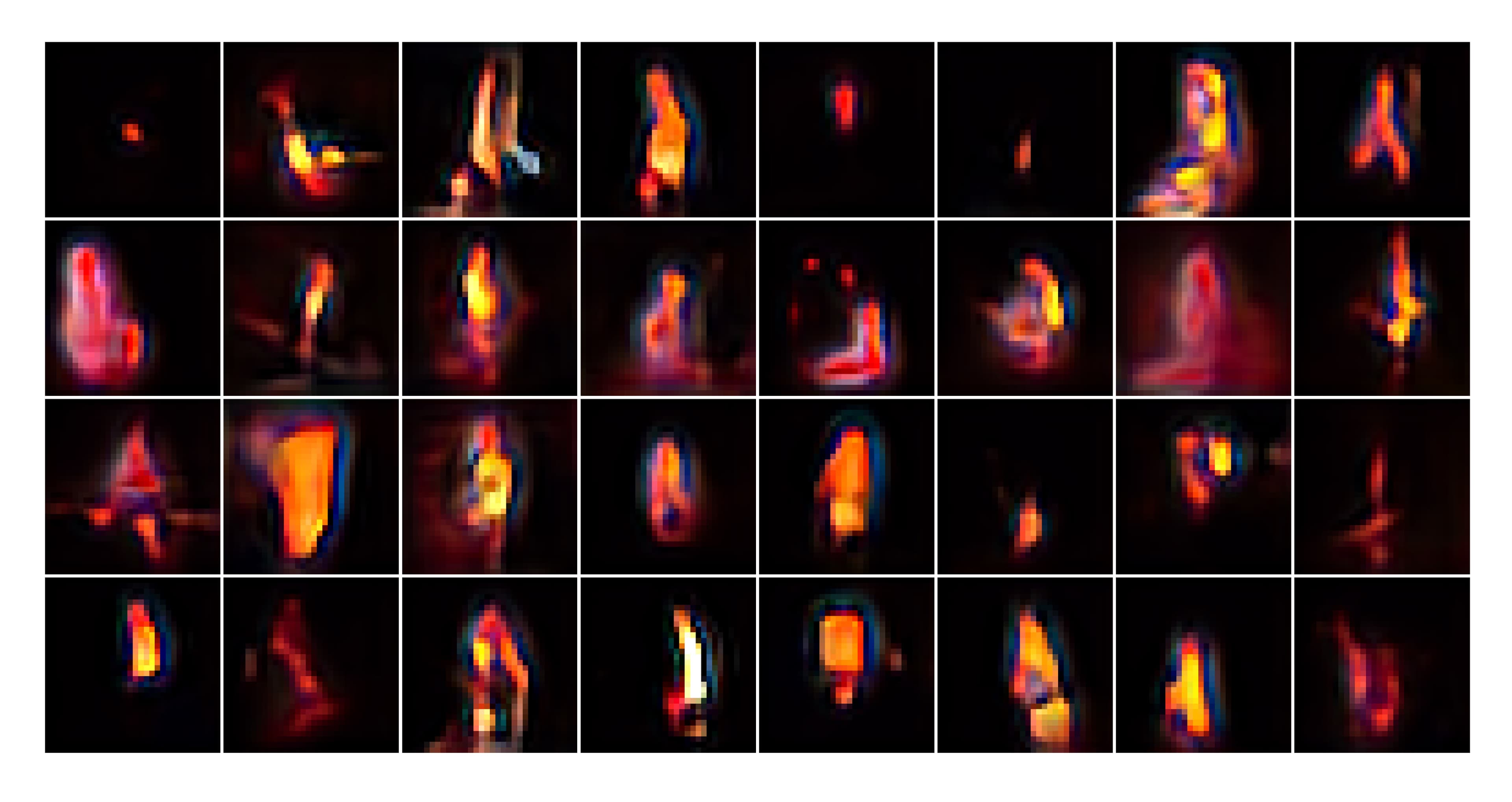

In 2015, Elman Mansimov published a conference paper about a model he and his collaborators had developed called alignDRAW. The system could generate (blurry, half-formed) pictures from simple text prompts: a dog blob, a blurry bus, a mirage of a herd of elephants. It was fragile and strange, but unforgettable. alignDRAW was one of the first serious attempts at what we now take for granted: the ability to type words and receive images.

I think it’s important to validate code experiments like alignDRAW as artworks of consequence. Mansimov’s GitHub repository and paper ought to be understood in the tradition of process-based art. The point is not only the finished artifacts but also the view they offer into the reasoning, methods, and limitations behind them. What’s new about the networked age is that we can follow the development of these aesthetic and engineering experiments in real time, should we take the time to look.

Anyone closely following machine learning over the past decade knows that it’s on the preprint server arXiv, and in esoteric corners of Twitter, that you’re most likely to encounter glimpses of the world to come. Our feeds have become stages for engineers who display more mastery and awareness of the defining medium of our time (software) than any traditional artist. These code experiments emerge from all manner of sources: anonymous accounts testing prospective applications in public, academics demonstrating papers, or a steadily growing ecosystem of creative engineers working within or alongside larger tech companies to quickly test new interactions with existing tools.

The alignDRAW model revisited

To mark the tenth anniversary of his original 2015 paper, Elman Mansimov recreated the alignDRAW model using contemporary hardware and software while preserving its original architecture and training data. The reconstruction was not intended to improve or modernize the model, but to re-stage it as a historical experiment, recovering the uncertain emergence of images from text prompts that once defined the early days of generative AI. By revisiting the model’s limitations, including its low resolution and visible artifacts, Mansimov foregrounded alignDRAW’s role as both a technical document and an aesthetic event, bridging the gap between scientific reproducibility and artistic reenactment.

alignDRAW prints

To accompany the tenth-anniversary presentations, we produced a short film in which Elman Mansimov and Alejandro Cartagena visit Griffin Editions, New York’s renowned print studio, to follow the making of the alignDRAW dye-sublimation prints. The camera stays with the printers as they profile and proof, transfer inks under heat and pressure, and finish each work by hand. The film links code to craft: pixels become pigment; latent concepts become objects; the model’s low-resolution artifacts are honored rather than corrected.

Exhibitions, 2023 - 2025

From 2023 to 2025, we exhibited alignDRAW at Paris Photo (Paris, 2023), HERE at No. 9 Cork Street (London, 2023), Christie’s Online (2023), during Art Basel in Switzerland (2024), and in a permanent installation at our exhibition space in Notting Hill. The work has also been acquired and exhibited by the Worcester Art Museum (Massachusetts, 2024).

From the Archives...

Video primer: If you’re coming to this story fresh, a good place to start is Vox’s explainer “How programmers turned the internet into a paintbrush” (June 2022), a 13‑minute video on how modern text-to-image models like DALL·E, Midjourney, and Imagen work, why they matter, and how they began with alignDRAW.

The original experiment: Generating Images from Captions with Attention (Mansimov et al., 2015) is the alignDRAW paper that first showed how a network could compose novel scenes from text by iteratively drawing on a canvas while attending to each word.

The inflection point at scale: Zero‑Shot Text‑to‑Image Generation (Ramesh et al., 2021, “DALL·E”) is from an OpenAI team including Ilya Sutskever (then Chief Scientist and a co‑founder of OpenAI) and Mark Chen (OpenAI research lead; later Head of Frontiers Research). The paper’s introduction explicitly credits Mansimov et al. (2015) as the start of modern text‑to‑image methods.